The DevOps 3 Ways - Remove The Software Delivery Plagues

A Successful Software Project Delivery

Timely software delivery does not mean your vendor has delivered everything you ordered. Even if they have delivered, there is no guarantee that the product will succeed or that the product delivered plays a positive role in the project's success. A product succeeds only when it provides enough value to its users, so software vendors must attend to value if a project delivery is to be successful.

Value and Value Stream

A product that delivers proper value within an acceptable timeframe is the key to product success. Value is whatever benefits the end user. Many other factors affect a product's success, but a product that offers little value to its end users will fail whether it is delivered on time or late.

We must take care of the value stream to add good value to the product. In DevOps, we typically define our technology value stream as the process required to convert a business hypothesis into a technology-enabled service that delivers value to the customer. We can’t expect the MI phone factory to deliver iPhone quality; a value stream that delivers iPhone quality must be different.

So we must consider both delivery time and value when addressing the delivery issue.

Outdated value stream, chronic conflict and lack of new ideas are to be blamed

Almost every IT organization has an inherent conflict between Development and IT Operations. This creates a downward spiral, resulting in ever-slower time to market for new products and features, reduced quality, increased outages, and, worst of all, an ever-increasing amount of technical debt. The term “technical debt” was first coined by Ward Cunningham. Analogous to financial debt, technical debt describes how decisions we make lead to problems that get increasingly more difficult to fix over time, continually reducing our options in the future—even when taken on judiciously, we still incur interest.

Costs keep rising while delivery keeps slowing, and eventually the situation becomes unmanageable. The root problem lies with outdated value streams, chronic conflict and lack of new ideas.

The DevOps 3 ways

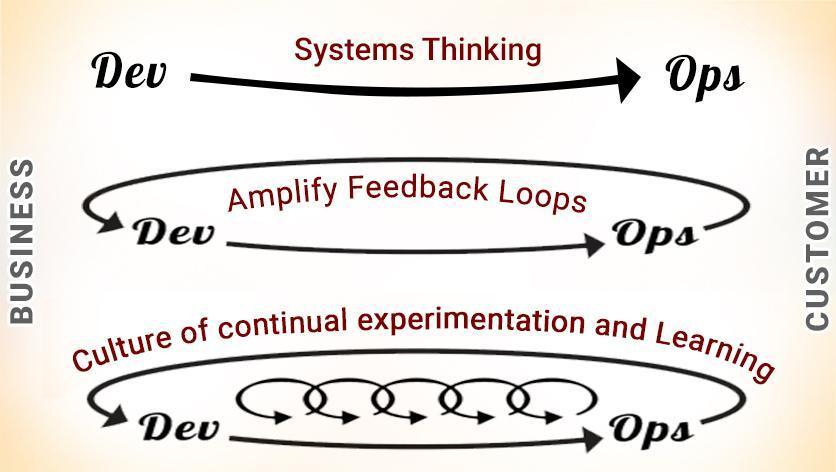

DevOps addresses this delivery plague in three ways:

- Flow

- Feedback

- Continual Experimentation

Problems arise when a process stops or someone gets stuck. Every process and person in the dependency chain is then affected, so the problem compounds. The immediate effect is project delay; the long-term effect is delivery with poor quality and reduced value.

Theory of flow

The First Way emphasizes the performance of the entire system, as opposed to the performance of a specific silo of work or department. A silo can be as large as a division (e.g., Development or IT Operations) or as small as an individual contributor (e.g., a developer or system administrator).

The focus is on all business value streams that are enabled by IT. Ideally, the system should cover all the functional areas and remove as much manual work as possible by properly automating the workflow. In other words, the flow begins when requirements are identified (e.g., by the business or IT), continues as they are built in Development, and ends when they are transitioned into IT Operations, where the value is delivered to the customer as a service. This is an application of “systems thinking” as described by Peter Senge in his book The Fifth Discipline. It is achieved by interconnecting all the related departments through IT-enabled services, making the work visible, always seeking to increase flow, and achieving a profound understanding of the system.

It also means never passing a known defect to downstream work centres and never allowing a local optimization to create global degradation (as per Deming).

MAKE OUR WORK VISIBLE

A significant difference between technology and manufacturing value streams is that our work is invisible. Unlike physical processes, in the technology value stream, we cannot easily see where flow is being impeded or when work is piling up in front of constrained work centres. In manufacturing, transferring work between work centres is usually highly visible and slow because inventory must be physically moved.

However, in technology work, the move can be made with a click of a button, such as by re-assigning a work ticket to another team. Because it is so easy, work can bounce between teams endlessly due to incomplete information, or work can be passed onto downstream work centres with problems that remain utterly invisible until we are late delivering what we promised to the customer or our application fails in the production environment.

To help us see where work is flowing well and where work is queued or stalled, we need to make our work as visible as possible. One of the best methods of doing this is using visual work boards, such as kanban boards or sprint planning boards.

LIMIT WORK IN PROCESS (WIP)

In manufacturing, daily work is typically dictated by a production schedule that is generated regularly (e.g., daily, weekly), establishing which jobs must be run based on customer orders, order due dates, parts available, and so forth.

In technology, our work is usually far more dynamic—especially in shared services, where teams must satisfy the demands of many different stakeholders. As a result, daily work becomes dominated by the priority du jour, often with requests for urgent work through every communication mechanism possible, including ticketing systems, outage calls, emails, phone calls, chat rooms, and management escalations.

Disruptions in manufacturing are also highly visible and costly, often requiring breaking the current job and scrapping any incomplete work to start the new job. This high level of effort discourages frequent disruptions.

However, interrupting technology workers is easy because the consequences are invisible to almost everyone, even though the negative impact on productivity may be far more significant than in manufacturing. For instance, an engineer assigned to multiple projects must switch between tasks, incurring all the costs of re-establishing context and cognitive rules and goals.

Studies have shown that the time to complete even simple tasks, such as sorting geometric shapes, significantly degrades when multitasking. Of course, because our work in the technology value stream is far more cognitively complex than sorting geometric shapes, the effects of multitasking on process time are much worse.

We can limit multitasking when we use a kanban board to manage our work, such as by codifying and enforcing WIP (work in progress) limits for each column or work centre, which put an upper limit on the number of cards that can be in a column.

For example, we may set a WIP limit of three cards for testing. When three cards are already in the test lane, no new cards can be added unless a card is completed or removed from the “in work” column and put back into the queue (i.e., putting the card back to the column to the left). Nothing can be worked on until it is represented first in a work card, reinforcing that all work must be made visible.
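As a rough illustration of the three-card testing limit described above, the sketch below models a board whose columns refuse new cards once their WIP limit is reached. The `KanbanBoard` class, its method names, and the column names are hypothetical and exist only for this example; real kanban tools enforce limits in their own ways.

```python
class WipLimitExceeded(Exception):
    """Raised when a card would push a column past its WIP limit."""


class KanbanBoard:
    def __init__(self, wip_limits):
        # wip_limits maps column name -> maximum cards (None means unlimited).
        self.wip_limits = dict(wip_limits)
        self.columns = {name: [] for name in wip_limits}

    def _check_limit(self, column):
        limit = self.wip_limits[column]
        if limit is not None and len(self.columns[column]) >= limit:
            raise WipLimitExceeded(f"{column!r} is at its WIP limit of {limit}")

    def add_card(self, column, card):
        self._check_limit(column)
        self.columns[column].append(card)

    def move_card(self, card, source, target):
        if card not in self.columns[source]:
            raise ValueError(f"{card!r} is not in {source!r}")
        # Check the target first so a refused move leaves the board unchanged.
        self._check_limit(target)
        self.columns[source].remove(card)
        self.columns[target].append(card)


board = KanbanBoard({"ready": None, "testing": 3, "done": None})
for card in ["A", "B", "C", "D"]:
    board.add_card("ready", card)
for card in ["A", "B", "C"]:
    board.move_card(card, "ready", "testing")

try:
    board.move_card("D", "ready", "testing")  # a fourth card is refused
except WipLimitExceeded as error:
    print(error)

board.move_card("A", "testing", "done")   # finishing a card frees a slot
board.move_card("D", "ready", "testing")  # now the move succeeds
```

Refusing the move, rather than silently queueing it, is the design choice that makes the blockage visible to the team.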

Dominica DeGrandis, one of the leading experts on using kanbans in DevOps value streams, notes that “controlling queue size [WIP] is an extremely powerful management tool, as it is one of the few leading indicators of lead time—with most work items, we don’t know how long it will take until it’s completed.”
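One common way to make the link between queue size and lead time concrete is Little's Law, which the text above does not cite and which is brought in here as an assumption. For a stable system, it says that average lead time equals average WIP divided by average throughput. The function name and the sample numbers below are illustrative only.

```python
def average_lead_time(avg_wip, throughput_per_day):
    """Little's Law: average lead time (days) = average WIP / average throughput."""
    if throughput_per_day <= 0:
        raise ValueError("throughput must be positive")
    return avg_wip / throughput_per_day


# Hypothetical team that finishes 1.5 cards per day on average.
for wip in (3, 6, 12):
    print(wip, "cards in progress ->", average_lead_time(wip, 1.5), "days")
```

With throughput held constant, doubling WIP doubles the average lead time, which is why queue size can serve as an early warning before any item is actually finished.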

Limiting WIP also makes it easier to see problems that prevent the completion of work. For instance, when we limit WIP, we may have nothing to do because we are waiting on someone else. Although it may be tempting to start new work (i.e., “It’s better to be doing something than nothing”), a far better action would be to find out what is causing the delay and help fix that problem. Bad multitasking often occurs when people are assigned to multiple projects, resulting in many prioritization problems.

REDUCE BATCH SIZES

Another key component to creating smooth and fast flow is performing work in small batch sizes. Before the Lean manufacturing revolution, it was common practice to manufacture in large batch sizes (or lot sizes), especially for operations where job setup or switching between jobs was time-consuming or costly. For example, producing large car body panels requires setting large and heavy dies onto metal stamping machines, which could take days. When changeover cost is so expensive, we would often stamp as many panels at a time as possible, creating large batches to reduce the number of changeovers.

However, large batch sizes result in skyrocketing WIP levels and high variability in flow that cascade through the entire manufacturing plant. The result is long lead times and poor quality—if a problem is found in one body panel, the entire batch has to be scrapped.

One of the key lessons in Lean is that to shrink lead times and increase quality, we must strive to shrink batch sizes continually. The theoretical lower limit for batch size is single-piece flow, where each operation is performed one unit at a time.

The dramatic differences between large and small batch sizes can be seen in the simple newsletter mailing simulation described in Lean Thinking: Banish Waste and Create Wealth in Your Corporation by James P. Womack and Daniel T. Jones.

The large batch strategy (i.e., “mass production”) would be to perform one operation on each of the ten brochures sequentially. In other words, we would first fold all ten sheets of paper, then insert each of them into envelopes, seal all ten envelopes, and stamp them.

On the other hand, in the small batch strategy (i.e., “single-piece flow”), all the steps required to complete each brochure are performed sequentially before starting on the next brochure. In other words, we fold one sheet of paper, insert it into the envelope, seal it, and stamp it—only then do we start the process with the next sheet of paper.

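The difference between large and small batch sizes is dramatic (see figure 7). Suppose each of the four operations takes ten seconds for each of the ten envelopes. With the large batch size strategy, the first completed and stamped envelope is produced only after 310 seconds: three full operations of 100 seconds each, plus ten seconds to stamp the first envelope. With the small batch strategy, the first completed envelope is produced after only 40 seconds.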

Worse, suppose we discover during the envelope sealing operation that we made an error in the first step of folding. In the large batch case, the earliest we would discover the error is at two hundred seconds, and we have to refold and reinsert all ten brochures in our batch again.
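The arithmetic of the mailing simulation can be checked with a short sketch. It assumes, as in the example above, four operations (fold, insert, seal, stamp) of ten seconds each on ten envelopes, performed by one worker with no changeover time. The function names are made up for this illustration.

```python
N_ITEMS = 10   # envelopes
N_OPS = 4      # fold, insert, seal, stamp
SECS = 10      # seconds per operation per envelope


def large_batch_start(op, item):
    """Start time of operation `op` on `item` (both 0-based) when each
    operation is run on the whole batch before the next one begins."""
    return op * N_ITEMS * SECS + item * SECS


def single_piece_start(op, item):
    """Start time when every operation is finished on one item before
    the next item is touched."""
    return item * N_OPS * SECS + op * SECS


SEAL, STAMP = 2, 3

# Time at which the first envelope is completely finished.
print(large_batch_start(STAMP, 0) + SECS)   # 310
print(single_piece_start(STAMP, 0) + SECS)  # 40

# Earliest moment a folding error can be noticed while sealing.
print(large_batch_start(SEAL, 0))   # 200
print(single_piece_start(SEAL, 0))  # 20

# Both strategies finish the last envelope at the same time.
print(large_batch_start(STAMP, N_ITEMS - 1) + SECS)   # 400
print(single_piece_start(STAMP, N_ITEMS - 1) + SECS)  # 400
```

Total effort is identical in both strategies; what changes is how soon the first result appears and how soon a mistake becomes visible.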

REDUCE THE NUMBER OF HANDOFFS

In the technology value stream, we often have long deployment lead times measured in months because hundreds (or even thousands) of operations are required to move our code from version control into the production environment. Transmitting code through the value stream requires multiple departments to work on various tasks, including functional testing, integration testing, environment creation, server administration, storage administration, networking, load balancing, and information security.

Each time the work passes from team to team, we require all sorts of communication: requesting, specifying, signalling, coordinating, and often prioritizing, scheduling, deconflicting, testing, and verifying. This may require using different ticketing or project management systems; writing technical specification documents; communicating via meetings, emails, or phone calls; and using file system shares, FTP servers, and Wiki pages.

Each step is a potential queue where work will wait when we rely on resources shared between different value streams (e.g., centralized operations). The lead times for these requests are often so long that there is constant escalation to have work performed within the needed timelines.

Even under the best circumstances, some knowledge is inevitably lost with each handoff. With enough handoffs, the work can completely lose the context of the problem being solved or the organizational goal being supported. For instance, a server administrator may see a newly created ticket requesting that user accounts be created, without knowing the context behind the request.

To mitigate these types of problems, we strive to reduce the number of handoffs, either by automating significant portions of the work or by reorganizing teams so they can deliver value to the customer themselves instead of having to be constantly dependent on others. As a result, we increase the flow.

CONTINUALLY IDENTIFY AND ELEVATE OUR CONSTRAINTS

We must continually identify our system’s constraints and improve its work capacity to reduce lead times and increase throughput. In Beyond the Goal, Dr Goldratt states, “In any value stream, there is always a direction of flow, and there is always one and only one constraint; any improvement not made at that constraint is an illusion.”

If we improve a work centre positioned before the constraint, work will merely pile up at the bottleneck even faster, waiting for work to be performed by the bottlenecked work centre. On the other hand, if we improve a work centre positioned after the bottleneck, it remains starved, waiting for work to clear the bottleneck. As a solution, Dr Goldratt defined the “five focusing steps”:

- Identify the system’s constraint.

- Decide how to exploit the system’s constraint.

- Subordinate everything else to the above decisions.

- Elevate the system’s constraint.

- If in the previous steps a constraint has been broken, go back to step one, but do not allow inertia to cause a system constraint.
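The bottleneck argument can be illustrated with a toy model in which the throughput of a serial value stream is capped by its slowest stage. The stage names and weekly capacities are invented for this sketch, and the model deliberately ignores variability and queues.

```python
def throughput(capacities):
    """Items per week that a serial value stream can complete."""
    return min(capacities.values())


def constraint(capacities):
    """Name of the stage that currently limits throughput."""
    return min(capacities, key=capacities.get)


# Hypothetical capacities in items per week.
stages = {"develop": 10, "test": 4, "deploy": 8}
print(constraint(stages), throughput(stages))  # test 4

# Improving a stage before the constraint changes nothing.
print(throughput({**stages, "develop": 20}))   # 4

# Improving a stage after the constraint changes nothing either.
print(throughput({**stages, "deploy": 16}))    # 4

# Elevating the constraint raises throughput and moves the constraint.
elevated = {**stages, "test": 9}
print(constraint(elevated), throughput(elevated))  # deploy 8
```

The last case mirrors the fifth step: once the constraint is broken, a different stage becomes the limit and the analysis starts again.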

In typical DevOps transformations, as we progress from deployment lead times measured in months or quarters to lead times measured in minutes, the constraint usually follows this progression:

Environment creation: We cannot achieve deployments on demand if we always have to wait weeks or months for production or test environments. The countermeasure is to create environments that are on demand and completely self-serviced, so that they are always available when we need them.

Code deployment: We cannot achieve deployments on demand if each of our production code deployments takes weeks or months to perform (e.g., each deployment requires 1,300 manual, error-prone steps involving up to three hundred engineers). The countermeasure is to automate our deployments as much as possible, with the goal of making them completely automated so that any developer can run them self-service.

Test setup and run: We cannot achieve deployments on demand if every code deployment requires two weeks to set up our test environments and data sets, and another four weeks to manually execute all our regression tests. The countermeasure is to automate our tests so we can execute deployments safely and to parallelize them so the test rate can keep up with our code development rate.

Overly tight architecture: We cannot achieve deployments on demand if overly tight architecture means that every time we want to make a code change we have to send our engineers to scores of committee meetings in order to get permission to make our changes. Our countermeasure is to create a more loosely coupled architecture so that changes can be made safely and with more autonomy.

ELIMINATE HARDSHIPS AND WASTE IN THE VALUE STREAM

Shigeo Shingo, one of the pioneers of the Toyota Production System, believed that waste constituted the largest threat to business viability. The commonly used definition in Lean is “the use of any material or resource beyond what the customer requires and is willing to pay for.” He defined seven significant types of manufacturing waste: inventory, overproduction, extra processing, transportation, waiting, motion, and defects.

More modern interpretations of Lean have noted that “eliminating waste” can have a demeaning and dehumanizing context; instead, the goal is reframed to reduce hardship and drudgery in our daily work through continual learning to achieve the organization’s goals. In the rest of this article, the term waste implies this more modern definition, as it closely matches the DevOps ideals and desired outcomes.

In the book Implementing Lean Software Development: From Concept to Cash, Mary and Tom Poppendieck describe waste and hardship in the software development stream as anything that causes delay for the customer, such as activities that can be bypassed without affecting the result.

The following categories of waste and hardship come from Implementing Lean Software Development unless otherwise noted:

Partially done work: This includes any work in the value stream that has not been completed (e.g., requirement documents or change orders not yet reviewed) and work that is sitting in queue (e.g., waiting for QA review or server admin ticket). Partially done work becomes obsolete and loses value as time progresses.

Extra processes: Any additional work being performed in a process that does not add value to the customer. This may include documentation not used in a downstream work centre, or reviews or approvals that do not add value to the output. Extra processes add effort and increase lead times.

Extra features: Features built into the service that are not needed by the organization or the customer (e.g., “gold plating”). Extra features add complexity and effort to testing and managing functionality.

Task switching: When people are assigned to multiple projects and value streams, requiring them to context switch and manage dependencies between work, adding additional effort and time into the value stream.

Waiting: Any delays between work requiring resources to wait until they can complete the current work. Delays increase cycle time and prevent the customer from getting value.

Motion: The effort to move information or materials from one work centre to another. Motion waste can be created when people who need to communicate frequently are not colocated. Handoffs also create motion waste and often require additional communication to resolve ambiguities.

Defects: Incorrect, missing, or unclear information, materials, or products create waste, as effort is needed to resolve these issues. The longer the time between defect creation and defect detection, the more difficult it is to resolve the defect.

Nonstandard or manual work: Reliance on nonstandard or manual work from others, such as using non-rebuildable servers, test environments, and configurations. Ideally, any dependencies on Operations should be automated, self-service, and available on demand.

Heroics: For an organization to achieve goals, individuals and teams are put in a position where they must perform unreasonable acts, which may even become a part of their daily work (e.g., nightly 2:00 a.m. problems in production, creating hundreds of work tickets as part of every software release).

The 2nd Way: Amplify the Feedback Loop

The Second Way is about creating right-to-left feedback loops. Almost any process improvement initiative aims to shorten and amplify feedback loops so necessary corrections can be continually made.

The outcomes of the Second Way include understanding and responding to all customers, internal and external, shortening and amplifying all feedback loops, and embedding knowledge where we need it.

Two main aspects matter:

- A quicker response, so that context is not forgotten in the meantime, which would lead to relearning and rework

- A clearer representation (drawings, point-by-point documents, screenshots), so that others are not misled and do not misinterpret the message, which would also result in rework

SEE PROBLEMS AS THEY OCCUR

We must constantly test our design and operating assumptions in a safe work system. We aim to increase information flow in our system from as many areas as possible, sooner, faster, cheaper, and with as much clarity between cause and effect as possible. The more assumptions we can invalidate, the faster we can find and fix problems, increasing our resilience, agility, and ability to learn and innovate.

We do this by creating feedback and feedforward loops into our work system. In his book The Fifth Discipline: The Art & Practice of the Learning Organization, Dr Peter Senge described feedback loops as a critical part of learning organizations and systems thinking. Feedback and feedforward loops cause components within a system to reinforce or counteract each other.

In manufacturing, ineffective feedback often contributes to significant quality and safety problems. In one well-documented case at the General Motors Fremont manufacturing plant, there were no effective procedures to detect problems during the assembly process, nor were there detailed procedures on what to do when problems were found. As a result, there were instances of engines being put in backwards, cars missing steering wheels or tires, and cars even having to be towed off the assembly line because they wouldn’t start.

In contrast, in high-performing manufacturing operations, there is fast, frequent, and high-quality information flow throughout the entire value stream: every work operation is measured and monitored, and any defects or significant deviations are quickly found and acted upon. This is the foundation for quality, safety, continual learning and improvement.

In the technology value stream, we often get poor outcomes because of the absence of fast feedback. For instance, in a waterfall software project, we may develop code for an entire year and get no feedback on quality until we begin the testing phase or, worse, until we release our software to customers. When feedback is this delayed and infrequent, it is too slow to enable us to prevent undesirable outcomes.

In contrast, our goal is to create fast feedback and feedforward loops wherever work is performed, at all stages of the technology value stream, encompassing Product Management, Development, QA, Infosec, and Operations. This includes the creation of automated build, integration, and test processes so that we can immediately detect when a change has been introduced that takes us out of a correctly functioning and deployable state. We also create pervasive telemetry so we can see how all our system components are operating in the production environment and quickly detect when they are not operating as expected.

SWARM AND SOLVE PROBLEMS TO BUILD NEW KNOWLEDGE

It is not sufficient to merely detect when the unexpected occurs. When problems occur, we must swarm them, mobilizing whoever is required to solve them. According to Dr Spear, the goal of swarming is to contain problems before they have a chance to spread and to diagnose and treat the problem so that it cannot recur. “In doing so,” he says, “[we] build ever-deeper knowledge about how to manage the systems for doing our work, converting inevitable up-front ignorance into knowledge.”

The paragon of this principle is the Toyota Andon cord. In a Toyota manufacturing plant, above every work centre is a cord that every worker and manager is trained to pull when something goes wrong; for example, when a part is defective, when a required part is unavailable, or even when work takes longer than documented. When the Andon cord is pulled, the team leader is alerted and immediately works to resolve the problem. If the problem cannot be resolved within a specified time (e.g., fifty-five seconds), the production line is halted so that the entire organization can be mobilized to assist with problem resolution until a successful countermeasure has been developed.

Instead of working around the problem or scheduling a fix “when we have more time,” we swarm to fix it immediately, which is nearly the opposite of the behaviour at the GM Fremont plant described earlier. Swarming is necessary because it prevents the problem from progressing downstream, where the cost and effort to repair it increases exponentially, and technical debt is allowed to accumulate.

Preventing the introduction of new work enables continuous integration and deployment, which is single-piece flow in the technology value stream. All changes that pass our continuous build and integration tests are deployed into production, and any changes that cause tests to fail trigger our Andon cord and are swarmed until resolved.
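A minimal sketch of this Andon-cord behaviour in a deployment pipeline might look as follows. The `Pipeline` class, the check names, and the shape of a change (a dictionary with `id`, `builds` and `tests_pass` keys) are all assumptions made for illustration and do not correspond to any particular CI tool.

```python
class AndonCordPulled(Exception):
    """Raised when a check fails or when the line is already stopped."""


class Pipeline:
    def __init__(self, checks):
        # checks: list of (name, function) pairs; each function takes a
        # change and returns True when the change passes.
        self.checks = list(checks)
        self.stopped_by = None  # name of the failed check while stopped
        self.deployed = []

    def submit(self, change):
        # While the cord is pulled, no new work enters the pipeline.
        if self.stopped_by is not None:
            raise AndonCordPulled(
                f"line stopped by {self.stopped_by!r}; fix it before adding new work"
            )
        for name, check in self.checks:
            if not check(change):
                self.stopped_by = name
                raise AndonCordPulled(f"change {change['id']!r} failed {name!r}")
        self.deployed.append(change["id"])

    def resolve(self):
        """Called once the team has swarmed and fixed the problem."""
        self.stopped_by = None


pipeline = Pipeline([
    ("build", lambda change: change["builds"]),
    ("tests", lambda change: change["tests_pass"]),
])
pipeline.submit({"id": "c1", "builds": True, "tests_pass": True})
try:
    pipeline.submit({"id": "c2", "builds": True, "tests_pass": False})
except AndonCordPulled as error:
    print(error)
pipeline.resolve()
pipeline.submit({"id": "c3", "builds": True, "tests_pass": True})
print(pipeline.deployed)
```

Blocking every later submission until `resolve` is called is what forces the swarm: the failure cannot be worked around by pushing more changes.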

KEEP PUSHING QUALITY CLOSER TO THE SOURCE

We may inadvertently perpetuate unsafe work systems due to how we respond to accidents and incidents. In complex systems, adding more inspection steps and approval processes increases the likelihood of future failures. The effectiveness of approval processes decreases as we push decision-making further away from where the work is performed. Doing so not only lowers the quality of decisions but also increases our cycle time, thus decreasing the strength of the feedback between cause and effect and reducing our ability to learn from successes and failures. This can be seen even in smaller and less complex systems. When top-down, bureaucratic command and control systems become ineffective, it is usually because the variance between “who should do something” and “who is actually doing something” is too large due to insufficient clarity and timeliness.

Examples of ineffective quality controls include:

- Requiring another team to complete tedious, error-prone, and manual tasks that could be easily automated and run as needed by the team who needs the work performed

- Requiring approvals from busy people who are distant from the work, forcing them to make decisions without adequate knowledge of the work or the potential implications, or merely rubber stamping their approvals

- Creating large volumes of documentation of questionable detail that becomes obsolete shortly after it is written

- Pushing large batches of work to teams and special committees for approval and processing and then waiting for responses

Instead, we need everyone in our value stream to find and fix problems in their area of control as part of our daily work. By doing this, we push quality and safety responsibilities and decision-making to where the work is performed.

ENABLE OPTIMIZING FOR DOWNSTREAM WORK CENTERS

In the 1980s, Designing for Manufacturability principles sought to design parts and processes to create finished goods with the lowest cost, highest quality, and fastest flow. Examples include designing wildly asymmetrical parts to prevent them from being put on backwards and designing screw fasteners so they are impossible to over-tighten. This was a departure from how design was typically done, which focused on the external customers but overlooked internal stakeholders, such as the people performing the manufacturing.

Lean defines two types of customers we must design for: the external customer (who most likely pays for the service we deliver) and the internal customer (who receives and processes the work immediately after us). According to Lean, our most important customer is our next step downstream. Optimizing our work for them requires that we have empathy for their problems so that we can better identify the design problems that prevent fast and smooth flow.

In the technology value stream, we optimize for downstream work centres by designing for operations, where operational non-functional requirements (e.g., architecture, performance, stability, testability, reconfigurability, and security) are prioritized as highly as user features. By doing this, we create quality at the source, likely resulting in a set of codified non-functional requirements that we can proactively integrate into every service we build.

Culture of continual Experimentation and Learning

The Third Way is about creating a culture that fosters continual experimentation, taking risks and learning from failure, and understanding that repetition and practice are prerequisites to mastery.

We need both of these equally. Experimentation and risk-taking ensure that we keep pushing to improve, even if it means going deeper into the danger zone than we have ever gone. And we must master the skills that help us retreat from the danger zone when we’ve gone too far.

The outcomes of the Third Way include allocating time for improving daily work, creating rituals that reward the team for taking risks and introducing faults into the system to increase resilience.
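One small-scale way to picture introducing faults to increase resilience is to wrap a dependency so that it fails on purpose some of the time, then confirm that the calling code copes. The sketch below is only an illustration: `with_fault_injection`, `call_with_retries`, and `fetch_price` are hypothetical names, and a plain retry loop stands in for whatever resilience mechanism a real system would use.

```python
import random


class InjectedFault(Exception):
    """A deliberately injected failure, used only for resilience testing."""


def with_fault_injection(func, failure_rate, rng=None):
    """Wrap `func` so that a fraction of calls raise InjectedFault."""
    rng = rng or random.Random()

    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFault(f"injected fault in {func.__name__}")
        return func(*args, **kwargs)

    return wrapper


def call_with_retries(func, attempts, *args, **kwargs):
    """Call `func`, retrying up to `attempts` times on injected faults."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return func(*args, **kwargs)
        except InjectedFault as error:
            last_error = error
    raise last_error


def fetch_price(item):
    # Stand-in for a call to a real dependency.
    return {"widget": 10}[item]


flaky_fetch = with_fault_injection(fetch_price, failure_rate=0.3)
try:
    print(call_with_retries(flaky_fetch, 5, "widget"))
except InjectedFault:
    print("all five attempts hit an injected fault")
```

Because the faults are random, a given run may or may not exhaust its retries; the point is that the failure path is exercised routinely rather than for the first time during a real outage.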

ENABLING ORGANIZATIONAL LEARNING AND A SAFETY CULTURE

When we work within a complex system, we cannot perfectly predict all the outcomes of any action we take. This contributes to unexpected, or even catastrophic, outcomes and accidents in our daily work, even when we take precautions and work carefully. When these accidents affect our customers, we seek to understand why they happen. The root cause is often deemed human error, and the all too common management response is to “name, blame, and shame” the person who caused the problem. And, either subtly or explicitly, management hints that the person guilty of committing the error will be punished.

Dr Ron Westrum’s typology of organizational cultures distinguishes three types, which differ in how they treat information and failure:

Large amounts of fear and threat characterize pathological organizations. People often hoard information, withhold it for political reasons, or distort it to make themselves look better. Failure is often hidden.

Rules and processes characterize bureaucratic organizations, often to help individual departments maintain their “turf.” Failure is processed through a system of judgment, resulting in either punishment or justice and mercy.

Generative organizations are characterized by actively seeking and sharing information to enable the organization to achieve its mission. Responsibilities are shared throughout the value stream, and failure results in reflection and genuine inquiry.

INSTITUTIONALIZE THE IMPROVEMENT OF DAILY WORK

Teams are often unable or unwilling to improve the processes they operate within. The result is not only that they continue to suffer from their current problems but also that their suffering grows worse over time. Mike Rother observed in Toyota Kata that in the absence of improvements, processes don’t stay the same: due to chaos and entropy, they degrade over time.

In the technology value stream, when we avoid fixing our problems, relying on daily workarounds, our problems and technical debt accumulate until all we do is perform workarounds, trying to avoid disaster, with no cycles left over for doing productive work. This is why Mike Orzen, author of Lean IT, observed, “Even more important than daily work is the improvement of daily work.”

We improve daily work by explicitly reserving time to pay down technical debt, fix defects, and refactor and improve problematic areas of our code and environments—we do this by reserving cycles in each development interval or by scheduling kaizen blitzes, which are periods when engineers self-organize into teams to work on fixing any problem they want.

The result of these practices is that everyone always finds and fixes problems in their area of control as part of their daily work. When we finally fix the daily problems we’ve worked around for months (or years), we can eradicate the less apparent problems from our system. By detecting and responding to these ever-weaker failure signals, we fix problems when it is easier and cheaper and when the consequences are negligible.

TRANSFORM LOCAL DISCOVERIES INTO GLOBAL IMPROVEMENTS

When new learnings are discovered locally, there must also be some mechanism to enable the rest of the organization to use and benefit from that knowledge. In other words, when teams or individuals have experiences that create expertise, our goal is to convert that tacit knowledge (i.e., knowledge that is difficult to transfer to another person by writing it down or verbalizing it) into explicit, codified knowledge, which becomes someone else’s expertise through practice. This ensures that when anyone else does similar work, they do so with the cumulative and collective experience of everyone in the organization who has ever done the same work.

A remarkable example of turning local knowledge into global knowledge is the US Navy’s Nuclear Power Propulsion Program (also known as “NR” for “Naval Reactors”), which has over 5,700 reactor-years of operation without a single reactor-related casualty or escape of radiation.

The NR is known for its intense commitment to scripted procedures and standardized work. It requires an incident report for any departure from procedure or normal operations, no matter how minor the failure signal, so that learnings accumulate. Procedures and system designs are then constantly updated based on these learnings.

The result is that when a new crew sets out to sea on their first deployment, they and their officers benefit from the collective knowledge of 5,700 accident-free reactor years. Equally impressive is that their experiences at sea will be added to this collective knowledge, helping future crews safely achieve their missions.

In the technology value stream, we must create similar mechanisms to create global knowledge. Examples include making all our blameless post-mortem reports searchable by teams trying to solve similar problems, and creating shared source code repositories that span the entire organization, where shared code, libraries, and configurations that embody the best collective knowledge of the organization can be easily reused. All these mechanisms help convert individual expertise into artefacts that the rest of the organization can use.
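To make the idea of searchable post-mortem reports concrete, here is a minimal sketch of a keyword index over report text. The report IDs, the report contents, and the function names (`build_index`, `search`) are all hypothetical, invented for illustration; a real organization would more likely rely on an existing search tool or wiki.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Split text into a set of lowercase alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_index(reports):
    """Map each word to the set of report IDs whose text contains it."""
    index = defaultdict(set)
    for report_id, text in reports.items():
        for word in tokenize(text):
            index[word].add(report_id)
    return index


def search(index, query):
    """Return the sorted IDs of reports that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return []
    matches = set.intersection(*(index.get(word, set()) for word in words))
    return sorted(matches)


# Hypothetical post-mortem summaries, used only as sample data.
REPORTS = {
    "PM-001": "Database failover took ten minutes because the replica lagged",
    "PM-002": "Expired TLS certificate caused checkout outage",
    "PM-003": "Replica lagged after schema migration locked the orders table",
}

index = build_index(REPORTS)
print(search(index, "replica lagged"))  # ['PM-001', 'PM-003']
```

A team investigating replica lag would find both earlier incidents at once, which is the point of turning a local discovery into a global one.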

INJECT RESILIENCE PATTERNS INTO OUR DAILY WORK

Lower-performing manufacturing organizations buffer themselves from disruptions in many ways—in other words, they bulk up or add flab. For instance, managers may choose to stockpile more inventory at each work centre to reduce the risk of a work centre being idle (due to inventory arriving late, inventory that had to be scrapped, etc.). However, that inventory buffer also increases WIP, which has all sorts of undesired outcomes, as previously discussed.

Similarly, managers may increase capacity by buying more capital equipment, hiring more people, or even increasing floor space to reduce the risk of a work centre going down due to machinery failure. All these options increase costs.

In contrast, high performers achieve the same results (or better) by improving daily operations, continually introducing tension to elevate performance, and engineering more resilience into their system.

Consider a typical experiment at a mattress factory of Aisin Seiki Global, one of Toyota’s top suppliers. Suppose they had two production lines, each capable of producing one hundred units per day. On slow days, they would send all production onto one line, experimenting with ways to increase capacity and identify vulnerabilities in their process, knowing that if overloading the line caused it to fail, they could send all production to the second line.

Through relentless and constant experimentation in their daily work, they were able to continually increase capacity, often without adding new equipment or hiring more people. The emergent pattern that results from these types of improvement rituals improves not only performance but also resilience, because the organization is always in a state of tension and change. This process of applying stress to increase resilience was named antifragility by author and risk analyst Nassim Nicholas Taleb.

In the technology value stream, we can introduce the same type of tension into our systems by constantly seeking to reduce deployment lead times, increase test coverage, decrease test execution times, and even re-architect if necessary to increase developer productivity or reliability.

We may also perform Game Day exercises, where we rehearse large-scale failures, such as turning off entire data centres. Or we may inject ever-larger scale faults into the production environment (such as the famous Netflix “Chaos Monkey,” which randomly kills processes and compute servers in production) to ensure we’re as resilient as we want to be.
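The idea of injecting ever-larger faults can be sketched as a small simulation. This is not Netflix’s Chaos Monkey and it touches no real infrastructure: the server names, the `min_healthy` threshold, and the function names are assumptions made up for illustration. It only models the question a Game Day asks, namely how large a fault the service can absorb.

```python
import random


def kill_random_instances(instances, kill_count, rng):
    """Return the instances that remain after killing kill_count at random."""
    victims = set(rng.sample(sorted(instances), kill_count))
    return instances - victims


def service_survives(instances, min_healthy):
    """Assume the service stays up while at least min_healthy instances run."""
    return len(instances) >= min_healthy


def run_game_day(instance_count, min_healthy, max_kills, seed=0):
    """Inject ever-larger faults; return the first fault size that breaks service.

    Returns None if the service survives every fault size that was tried.
    """
    rng = random.Random(seed)
    all_instances = {f"server-{i}" for i in range(instance_count)}
    for kills in range(1, min(max_kills, instance_count) + 1):
        survivors = kill_random_instances(all_instances, kills, rng)
        if not service_survives(survivors, min_healthy):
            return kills
    return None


# Six servers, and the (assumed) service needs at least four of them.
print(run_game_day(instance_count=6, min_healthy=4, max_kills=5))  # 3
```

In a real exercise, the survival check would be an actual health probe rather than a count, and the result would feed back into capacity and architecture decisions.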

LEADERS REINFORCE A LEARNING CULTURE

Traditionally, leaders were expected to be responsible for setting objectives, allocating resources for achieving those objectives, and establishing the right combination of incentives. Leaders also establish the emotional tone for the organizations they lead. In other words, leaders lead by “making all the right decisions.”

However, significant evidence shows that leaders do not achieve greatness by making all the right decisions. Instead, the leader’s role is to create the conditions so their team can discover greatness in their daily work. In other words, creating greatness requires both leaders and workers, each mutually dependent upon the other.

Jim Womack, the author of Gemba Walks, described the complementary working relationship and mutual respect that must occur between leaders and frontline workers. According to Womack, this relationship is necessary because neither can solve problems alone: leaders are not close enough to the work, which is required to solve any problem, and frontline workers do not have the broader organizational context or the authority to make changes outside their work area.

Leaders must elevate the value of learning and disciplined problem-solving. Mike Rother formalized these methods in what he calls the coaching kata. The result is a method that mirrors the scientific method, where we explicitly state our True North goals, such as “sustain zero accidents” in the case of Alcoa or “double throughput within a year” in the case of Aisin.

These strategic goals then inform the creation of iterative, shorter-term goals, which are cascaded and executed by establishing target conditions at the value stream or work centre level (e.g., “reduce lead time by 10% within the next two weeks”).

These target conditions frame the scientific experiment: we explicitly state the problem we seek to solve, our hypothesis of how our proposed countermeasure will solve it, our methods for testing that hypothesis, our interpretation of the results, and our use of learnings to inform the next iteration.
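As a rough illustration, an experiment framed this way can be recorded and checked against its target condition. The sketch below assumes the “reduce lead time by 10%” target mentioned earlier; the `Experiment` class, its field names, and the lead-time figures are hypothetical, not taken from any real team.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Experiment:
    problem: str               # the problem we seek to solve
    hypothesis: str            # how the countermeasure should solve it
    baseline_lead_times: list  # lead times in hours before the change
    trial_lead_times: list     # lead times in hours after the change
    target_reduction: float    # 0.10 means "reduce lead time by 10%"

    def observed_reduction(self):
        """Fractional drop in mean lead time relative to the baseline."""
        baseline = mean(self.baseline_lead_times)
        trial = mean(self.trial_lead_times)
        return (baseline - trial) / baseline

    def target_met(self):
        """True when the observed reduction reaches the target condition."""
        return self.observed_reduction() >= self.target_reduction


# Illustrative numbers only.
experiment = Experiment(
    problem="Deployments wait too long for manual approval",
    hypothesis="Automating the approval check will shorten lead time",
    baseline_lead_times=[40, 50, 60],
    trial_lead_times=[40, 44, 48],
    target_reduction=0.10,
)

print(f"{experiment.observed_reduction():.0%}")  # 12%
print(experiment.target_met())                   # True
```

Whether or not the target is met, the recorded result informs the next iteration, and the next target condition.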

The leader helps coach the person conducting the experiment with questions that may include:

What was your last step, and what happened?

What did you learn?

What is your condition now?

What is your next target condition?

What obstacle are you working on now?

What is your next step?

What is your expected outcome?

When can we check?

This problem-solving approach in which leaders help workers see and solve problems in their daily work is at the core of the Toyota Production System, learning organizations, the Improvement Kata, and high-reliability organizations. Mike Rother observes that he sees Toyota “as an organization defined primarily by the unique behaviour routines it continually teaches to all its members.”

This scientific approach and iterative method guide our internal improvement processes in the technology value stream. We also experiment to ensure our products help our internal and external customers achieve their goals.

Desired Situation: Imagine a World Where Dev and Ops Become DevOps

Imagine a world where product owners, Development, QA, IT Operations, and Infosec work together to help each other and ensure that the overall organization succeeds. By working toward a common goal, they enable the fast flow of planned work into production (e.g., performing tens, hundreds or even thousands of code deploys daily) while achieving world-class stability, reliability, availability, and security.

In this world, cross-functional teams rigorously test their hypotheses of which features will most delight users and advance the organizational goals. They care not just about implementing user features but also about actively ensuring their work flows smoothly and frequently through the entire value stream without causing chaos and disruption to IT Operations or any other internal or external customer.

Simultaneously, QA, IT Operations, and Infosec are always working on ways to reduce friction for the team, creating work systems that enable developers to be more productive and get better outcomes. By adding the expertise of QA, IT Operations, and Infosec into delivery teams and automated self-service tools and platforms, teams can use that expertise in their daily work without being dependent on other teams.

This enables organizations to create a safe system of work where small teams can quickly and independently develop, test, and deploy code and value safely, securely, and reliably to customers. This allows organizations to maximize developer productivity, enable organizational learning, create high employee satisfaction, and win in the marketplace. These are the outcomes that result from DevOps.

For most of us, this is not the world we live in. Our system is often broken, resulting in inferior outcomes that fall well short of our true potential. In our world, Development and IT Operations are adversaries; testing and Infosec activities happen only at the end of a project, too late to correct any problems found; and almost any critical activity requires too much manual effort and too many handoffs, leaving us always waiting. Not only does this contribute to extremely long lead times to get anything done, but the quality of our work, especially production deployments, is also problematic and chaotic, negatively impacting our customers and our business.